Collecting Our Recordings

Impulse Responses

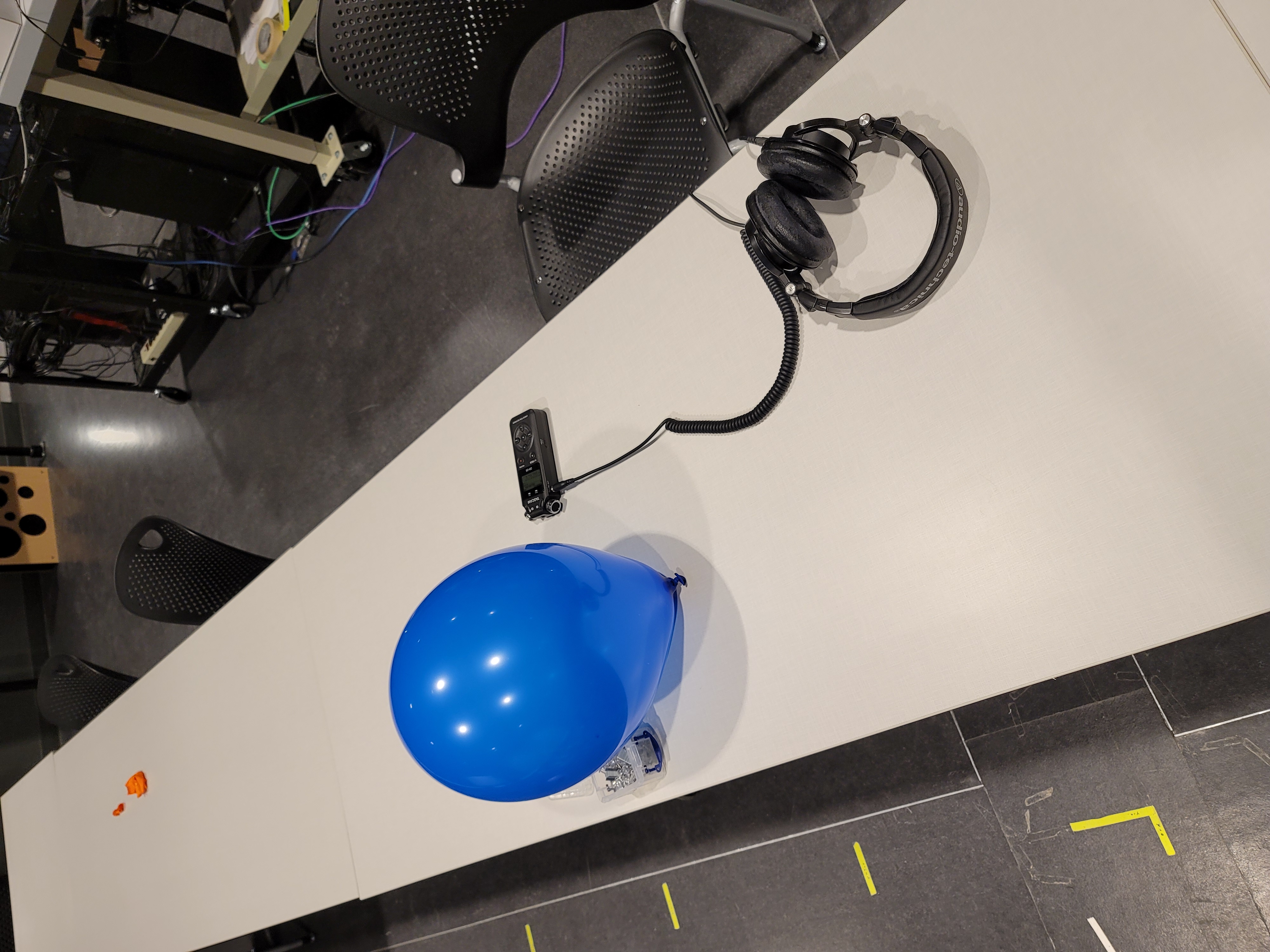

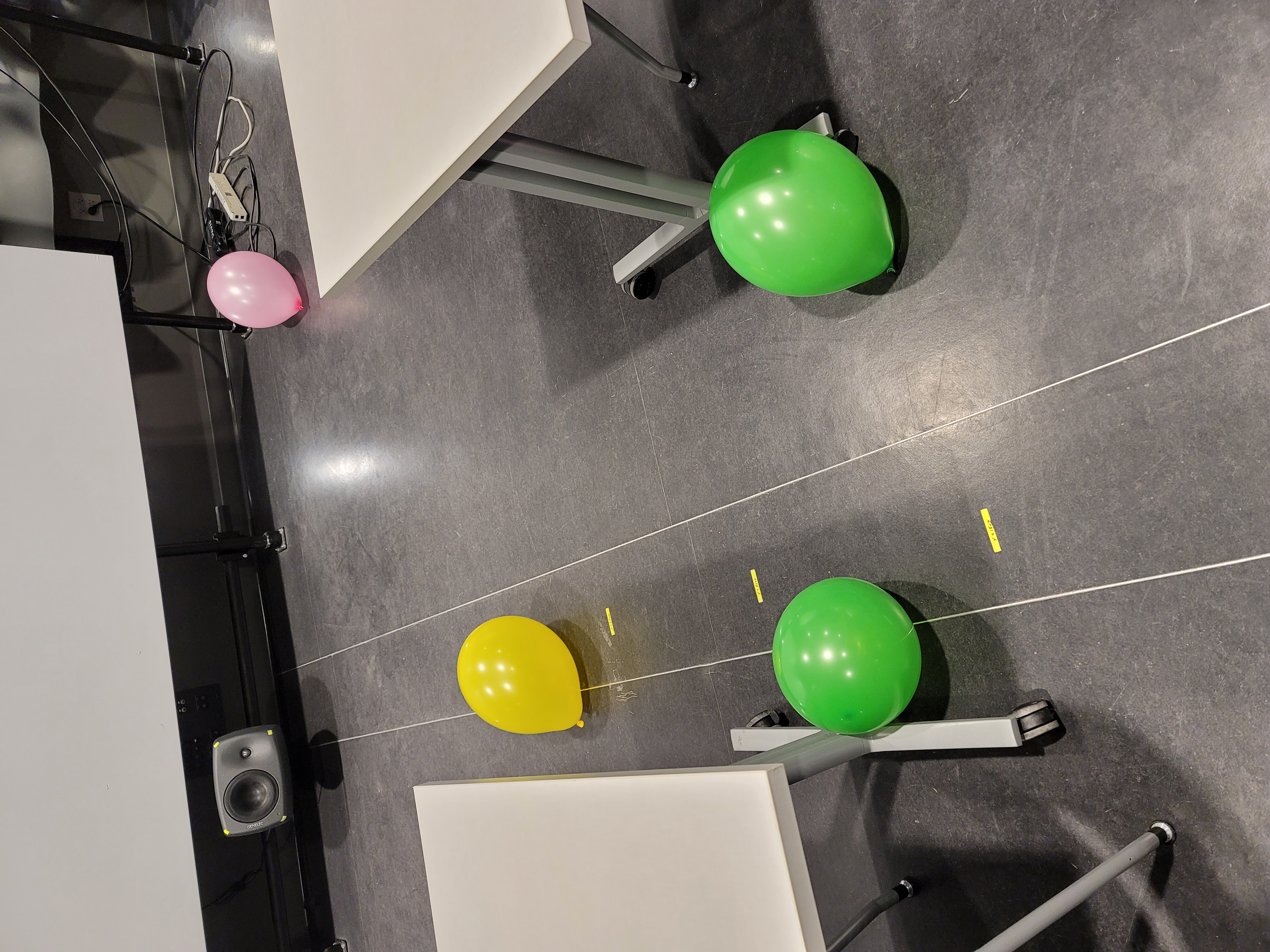

Impulse responses (IRs) are used in digital signal processing to capture the frequency response of a given system or space. In the audio domain, the impulse response of a room captures its reverberation characteristics. There are several ways to capture an impulse response for a room (1), several of which use a short, loud sound that covers the frequency spectrum. We took several high-quality recordings of balloons popping in the Chip Davis Studio to serve as our IR recordings. We also recorded a short clip of Jackson's voice, on which, alongside a few other recordings, we attempted to perform regular deconvolution. All balloon and voice recordings were made at a sample rate of 96 kHz and a bit depth of 24 bits. We varied the distance from balloon to mic on every recording to find which distance produced the best impulse response. Other aspects of the balloon popping (e.g., how far each balloon was inflated) were kept as similar as possible from recording to recording.
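
Turning a balloon-pop recording into a usable IR mostly means cutting the file just before the pop, keeping enough of the tail for the reverb to decay, fading out the end, and normalizing. The Python/NumPy sketch below assumes the pop is the loudest event in the file; the function name `extract_ir` and its default length, pre-roll, and fade values are hypothetical choices for illustration, not settings we used.

```python
import numpy as np

def extract_ir(recording, sample_rate, length_s=2.0, pre_roll_s=0.001, fade_s=0.05):
    """Trim a balloon-pop recording into a normalized impulse response.

    Hypothetical helper: assumes the pop is the loudest sample in the file.
    """
    x = np.asarray(recording, dtype=float)
    if x.ndim > 1:
        x = x.mean(axis=1)  # fold multichannel audio down to mono
    peak = int(np.argmax(np.abs(x)))  # location of the pop
    start = max(0, peak - int(pre_roll_s * sample_rate))  # keep a little pre-roll
    ir = x[start:start + int(length_s * sample_rate)].copy()
    n_fade = min(len(ir), int(fade_s * sample_rate))
    if n_fade > 0:
        ir[-n_fade:] *= np.linspace(1.0, 0.0, n_fade)  # linear fade-out avoids a click at the cut
    peak_value = np.max(np.abs(ir))
    if peak_value == 0:
        raise ValueError("recording is silent")
    return ir / peak_value  # normalize so the loudest sample is +/-1
```

The same function can be run on each balloon take so that the different mic distances can be compared by ear on equal footing.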

Our balloon popping setup


Standard balloon fill size


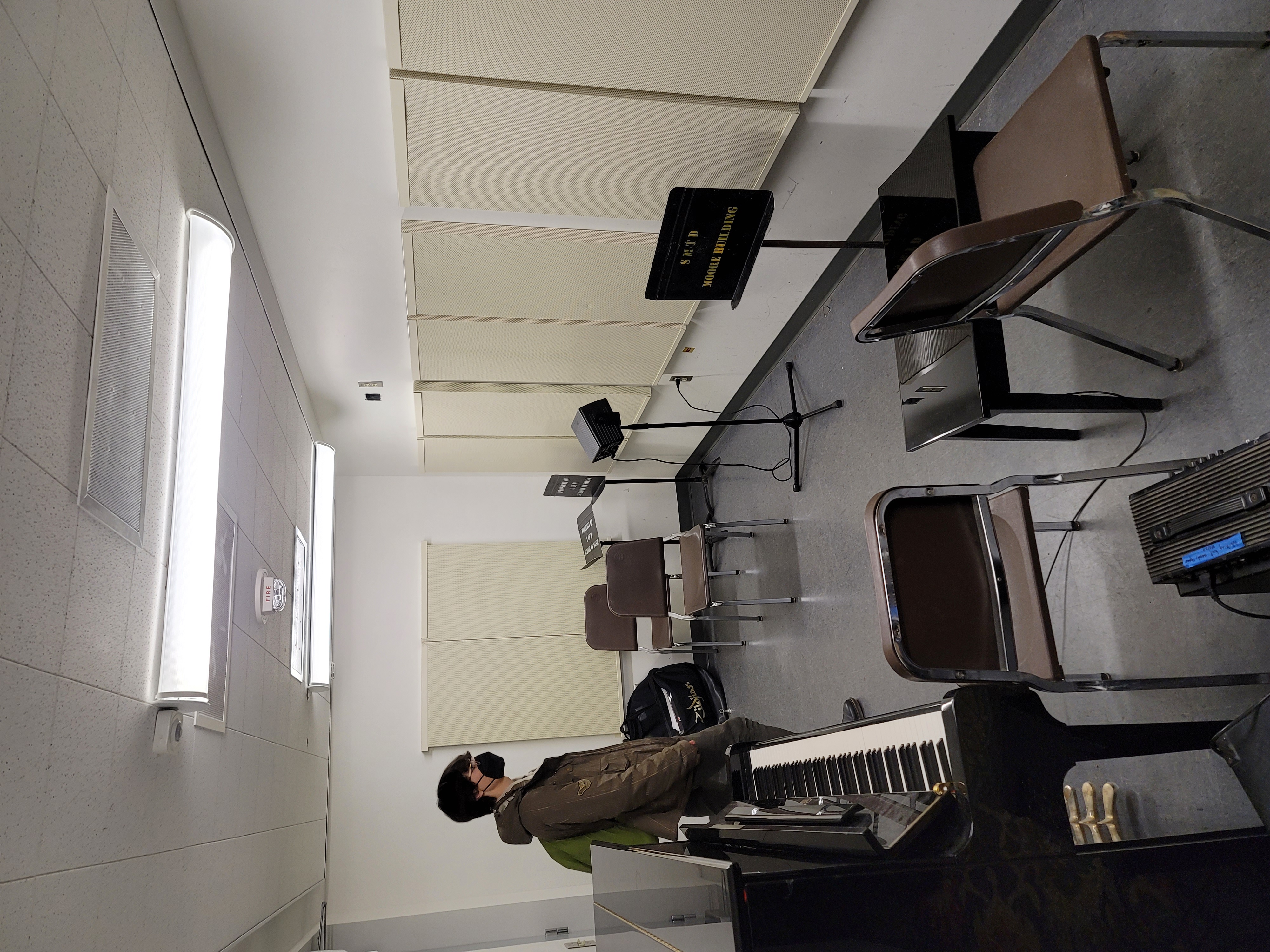

Our voice recording setup


Our sample wet recording (vocals)

Improved Impulse Response Recordings


When we first tested deconvolution with a known IR, we found it difficult to hear whether our algorithm was working properly on the Davis recordings, because Davis Studio is relatively dry (it has a short reverb tail). We decided it would be best to go back and record another round of impulse responses in several rooms of varying sizes, some with more obvious reverb.
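
Deconvolution with a known IR is easiest to reason about in the frequency domain: convolution becomes multiplication, so the dry spectrum is roughly the wet spectrum divided by the IR's spectrum. Plain division blows up wherever the IR has little energy, so this sketch swaps in a regularized (Wiener/Tikhonov-style) division. It is a generic technique, not necessarily the exact method in our MATLAB code; `deconvolve` and `eps` are hypothetical names, and the inputs are assumed to be mono float arrays at the same sample rate.

```python
import numpy as np

def deconvolve(wet, ir, eps=1e-3):
    """Estimate a dry signal from a wet recording and a known impulse response.

    Regularized spectral division: X = Y * conj(H) / (|H|^2 + eps * max|H|^2).
    eps trades accuracy for stability where the IR has little energy.
    """
    wet = np.asarray(wet, dtype=float)
    ir = np.asarray(ir, dtype=float)
    n = len(wet) + len(ir) - 1
    # zero-pad to a power of two >= n to limit circular wrap-around
    nfft = 1 << (n - 1).bit_length()
    Y = np.fft.rfft(wet, nfft)
    H = np.fft.rfft(ir, nfft)
    power = np.abs(H) ** 2
    X = Y * np.conj(H) / (power + eps * power.max())
    return np.fft.irfft(X, nfft)[:len(wet)]
```

Larger `eps` values give a smoother, less noisy estimate at the cost of leaving more of the room's coloration in place, so it is worth trying a few values by ear.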

We also wanted a variety of test recordings to see how our algorithm performs across different frequency ranges. Several short audio clips were selected for this, mostly taken from Logic Pro’s default sample library:

Drum loop - The same sample used for our previous convolution/deconvolution tests. We wanted to see how our process would work on a percussive sound with lots of transients.

Cello - A short loop of solo cello. How would deconvolution handle a low/midrange instrument?

Flute - A short sample of an African bamboo flute. How would deconvolution handle a higher-frequency instrument?

Speech (Jackson’s Voice) - The same recording of the spoken phrase “I am sitting in a room” used earlier. We had intended to re-record it to get an even drier take, but were not able to coordinate in time. Ultimately, we decided that the original recording was dry enough that this wouldn’t create any major issues.
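
One way to check how deconvolution behaves in different frequency ranges is to compare the energy in a few broad bands between a dry reference and the deconvolved result; because it compares band energies only, the two signals need not be sample-aligned. The band edges here (roughly low, low-mid, upper-mid, and high) are an arbitrary assumption, and both helper names are hypothetical.

```python
import numpy as np

# Hypothetical band edges in Hz: low, low-mid, upper-mid, high.
DEFAULT_EDGES = (20.0, 250.0, 2000.0, 8000.0, 20000.0)

def band_energies_db(x, sample_rate, edges=DEFAULT_EDGES):
    """Energy of x (dB, arbitrary reference) in each band [edges[i], edges[i+1])."""
    x = np.asarray(x, dtype=float)
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / sample_rate)
    levels = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        in_band = (freqs >= lo) & (freqs < hi)
        # small floor avoids log10(0) for empty or silent bands
        levels.append(10.0 * np.log10(power[in_band].sum() + 1e-20))
    return np.array(levels)

def band_error_db(reference, estimate, sample_rate, edges=DEFAULT_EDGES):
    """Per-band level of estimate minus reference, in dB (positive = too much energy)."""
    n = min(len(reference), len(estimate))
    return (band_energies_db(estimate[:n], sample_rate, edges)
            - band_energies_db(reference[:n], sample_rate, edges))
```

Running this on the drum loop, cello, flute, and speech clips gives one small table per clip; a band that is consistently far from 0 dB might point to where the deconvolution struggles.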

These 4 recordings (plus a single-sample “click” to obtain the IR) were played into the selected spaces through an Anchor Audio AN-1000X+ Speaker Monitor, which we borrowed from the Davis Studio control room. Though this portable speaker was relatively high quality, we were concerned that its necessarily imperfect frequency response would affect our results. One optimistic prediction was that the speaker's coloration would be filtered out, since the impulses were played through the same speaker. If this was not the case, we figured we would still be able to hear the difference between the wet and deconvolved versions well enough to determine whether our code was working. After all, our goal was not to perfectly reconstruct these particular recordings, but to build a tool that could be used to reconstruct reverberant recordings in general.
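
The reasoning behind that optimistic prediction can be written out, assuming the whole playback and recording chain is linear and time-invariant. Let $x[n]$ be a dry clip, $s[n]$ the response of the laptop, cable, and speaker, $r[n]$ the room, and $m[n]$ the recorder; the single-sample click is a unit impulse $\delta[n]$, whose spectrum equals 1 at every frequency.

```latex
\begin{aligned}
h_{\text{meas}}[n] &= \delta[n] * s[n] * r[n] * m[n] = s[n] * r[n] * m[n] \\
y[n] &= x[n] * s[n] * r[n] * m[n] \\
\frac{Y(\omega)}{H_{\text{meas}}(\omega)}
  &= \frac{X(\omega)\,S(\omega)\,R(\omega)\,M(\omega)}{S(\omega)\,R(\omega)\,M(\omega)}
   = X(\omega) \quad \text{wherever } S(\omega)\,R(\omega)\,M(\omega) \neq 0
\end{aligned}
```

Under these assumptions the speaker cancels along with the room, but only where it actually produces sound: outside its usable range $S(\omega)$ is close to zero, the division is ill-conditioned, and background noise dominates the estimate. It also assumes the speaker behaves the same for the click as for the clips (no level-dependent distortion).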

In total, we obtained recordings in 4 different rooms throughout the Moore building. Our process was to set up the speaker, position our recorder (a Tascam DR-05) elsewhere in the room, run a quick level check, and then play the various sound clips from a laptop connected to the speaker. There was again some concern that the laptop’s sound card or the aux cable would add further coloration, but we ultimately decided that these effects would be much smaller than the imperfections introduced by using a speaker in the first place.
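
A level check can also be repeated offline on a test take to confirm nothing clipped. This sketch reports peak and RMS level in dBFS for float samples normalized to [-1, 1]; the `level_report` name and the 0.999 clipping threshold are hypothetical choices, not part of our recording process.

```python
import numpy as np

def _to_dbfs(value):
    """Convert a linear amplitude (1.0 = full scale) to dBFS."""
    return 20.0 * np.log10(value) if value > 0 else -np.inf

def level_report(samples, clip_threshold=0.999):
    """Summarize the level of float audio normalized to [-1, 1]."""
    x = np.asarray(samples, dtype=float)
    peak = float(np.max(np.abs(x)))
    rms = float(np.sqrt(np.mean(x ** 2)))
    return {
        "peak_dbfs": _to_dbfs(peak),
        "rms_dbfs": _to_dbfs(rms),
        # samples at or above the threshold are likely clipped
        "clipped_samples": int(np.sum(np.abs(x) >= clip_threshold)),
    }
```

For the click in particular, which is the most transient sound played, a peak a few dB below 0 dBFS with zero clipped samples suggests the input gain was safe.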

The first space we recorded in was Kevreson Rehearsal Hall. The room was very large and rectangular, with high ceilings, but also had some acoustic treatment on the walls. It provided a medium-length reverb response. Our speaker was positioned in the room’s corner, facing the recorder, which was near the room’s center.
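
Descriptions like "relatively dry", "medium-length", and "very reverberant" can be put on a common scale by estimating each room's reverberation time (RT60) from its IR. This sketch uses Schroeder backward integration with a straight-line fit between -5 and -25 dB (a T20-style fit, extrapolated to 60 dB); the fit range and the `estimate_rt60` name are assumptions, and the sketch skips the noise-floor handling a real measurement would need.

```python
import numpy as np

def estimate_rt60(ir, sample_rate, fit_range_db=(-5.0, -25.0)):
    """Estimate RT60 (seconds) from an impulse response.

    Schroeder backward integration plus a linear fit over fit_range_db,
    extrapolated to a 60 dB decay. Assumes the IR is already trimmed
    so that its tail is not dominated by background noise.
    """
    ir = np.asarray(ir, dtype=float)
    energy = ir ** 2
    edc = np.cumsum(energy[::-1])[::-1]  # energy remaining from each sample onward
    edc_db = 10.0 * np.log10(edc / edc[0] + 1e-300)
    upper, lower = fit_range_db
    idx = np.nonzero((edc_db <= upper) & (edc_db >= lower))[0]
    if idx.size < 2:
        raise ValueError("decay never spans the fit range; IR may be too short")
    slope_db_per_s, _ = np.polyfit(idx / sample_rate, edc_db[idx], 1)
    return -60.0 / slope_db_per_s
```

Comparing the result for each room's click recording would show whether, for example, the lobby's tail really is longer than the rehearsal hall's.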

The second room we chose was a jazz practice room. This room was small and oblong, with some metal acoustic panels. It also contained a drum set that would sometimes resonate sympathetically with the audio being played. This raised some concerns, but we figured it could be considered another feature of the space; if features like this caused our algorithm to fail, that would be important to know. The speaker was pointed toward a wall and was about 5 ft from the recorder.

For our third room, we wanted a very reverberant space but struggled to find one with an available outlet. Eventually, we found a large, square lobby with no acoustic treatment that was very reverberant. One difficulty was that this area had some foot traffic and background noise, so we had to wait for moments when the room was unoccupied and quiet. The speaker was positioned in one corner, facing the recorder in the opposite corner.

Our final space was a small, entirely untreated room at the entrance to an elevator. As a result, this space had a short delay and an obvious comb-filter effect. The speaker was placed in the corner, with the recorder at the other end.
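
A comb filter is what a single strong, short-delay reflection produces: adding a copy of the sound delayed by τ seconds with gain g gives |H(f)| = |1 + g·e^(-j2πfτ)|, which dips at odd multiples of 1/(2τ) and peaks at multiples of 1/τ. The sketch below computes those frequencies from the extra distance the reflection travels; the single-reflection model, the 0.7 gain, and the 343 m/s speed of sound are illustrative assumptions, not measurements of that room.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, approximate value at room temperature

def comb_notches(extra_path_m, f_max=20000.0, c=SPEED_OF_SOUND):
    """Notch frequencies (Hz) from one reflection travelling extra_path_m farther than the direct sound."""
    tau = extra_path_m / c  # reflection delay in seconds
    k = np.arange(int(f_max * tau + 0.5) + 1)
    f = (2 * k + 1) / (2 * tau)  # |1 + g e^{-j 2 pi f tau}| is smallest where f * tau = k + 1/2
    return f[f <= f_max]

def comb_magnitude_db(freqs, extra_path_m, gain=0.7, c=SPEED_OF_SOUND):
    """Magnitude (dB) of direct sound plus one reflection with the given gain."""
    tau = extra_path_m / c
    h = 1 + gain * np.exp(-2j * np.pi * np.asarray(freqs) * tau)
    return 20.0 * np.log10(np.abs(h))
```

For example, a hypothetical reflection path 3.43 m longer than the direct path gives a 10 ms delay, with notches at 50, 150, 250 Hz and so on; shorter paths push the notches farther apart, which is why small untreated rooms make the effect so audible.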

With 4 spaces and 4 test sounds played in each, we figured we would have more than enough material to work with. Our goal was to capture all the impulse responses and recordings we would need so that we could turn our full attention to the code. Even if certain recordings turned out poorly, some combinations of rooms and audio clips would likely be useful for testing, and the variety of both would also help us determine the limitations of our approaches.

Since March 22nd, we've recorded IRs and sample audio in 4 new spaces using what we learned from our first attempts. For example, because MATLAB processes high-sample-rate files so slowly, we opted for a sample rate of 44.1 kHz this time around. We also recorded a wider variety of sample audio in different parts of the frequency spectrum to test our deconvolution algorithm more thoroughly. The rooms we used were Kevreson Rehearsal Hall, a small jazz ensemble practice room, a larger lobby, and a small rectangular room, all of which are located in the Moore building on North Campus.
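
An IR and a recording must share a sample rate before one can be deconvolved from the other, so older 96 kHz takes (such as the Davis Studio balloon pops) would need converting before use alongside the new 44.1 kHz files. A minimal sketch with SciPy's `scipy.signal.resample_poly`, which low-pass filters and resamples by the rational factor 44100/96000 = 147/320; the helper name `to_44k1` is hypothetical, and this conversion is not a step described in our process.

```python
from math import gcd

import numpy as np
from scipy.signal import resample_poly

def to_44k1(samples, src_rate=96000, dst_rate=44100):
    """Resample audio (samples along axis 0) from src_rate to dst_rate."""
    g = gcd(dst_rate, src_rate)
    up, down = dst_rate // g, src_rate // g  # 147 / 320 for 96 kHz -> 44.1 kHz
    return resample_poly(np.asarray(samples, dtype=float), up, down, axis=0)
```

Besides matching rates, this cuts the number of samples MATLAB (or any other tool) has to process by a factor of about 2.18.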

Kevreson Rehearsal Hall


Small Jazz Practice Space


Larger Lobby Area


Small Basement Room


Because this process produced so many audio files, we've included a link to all of them here.